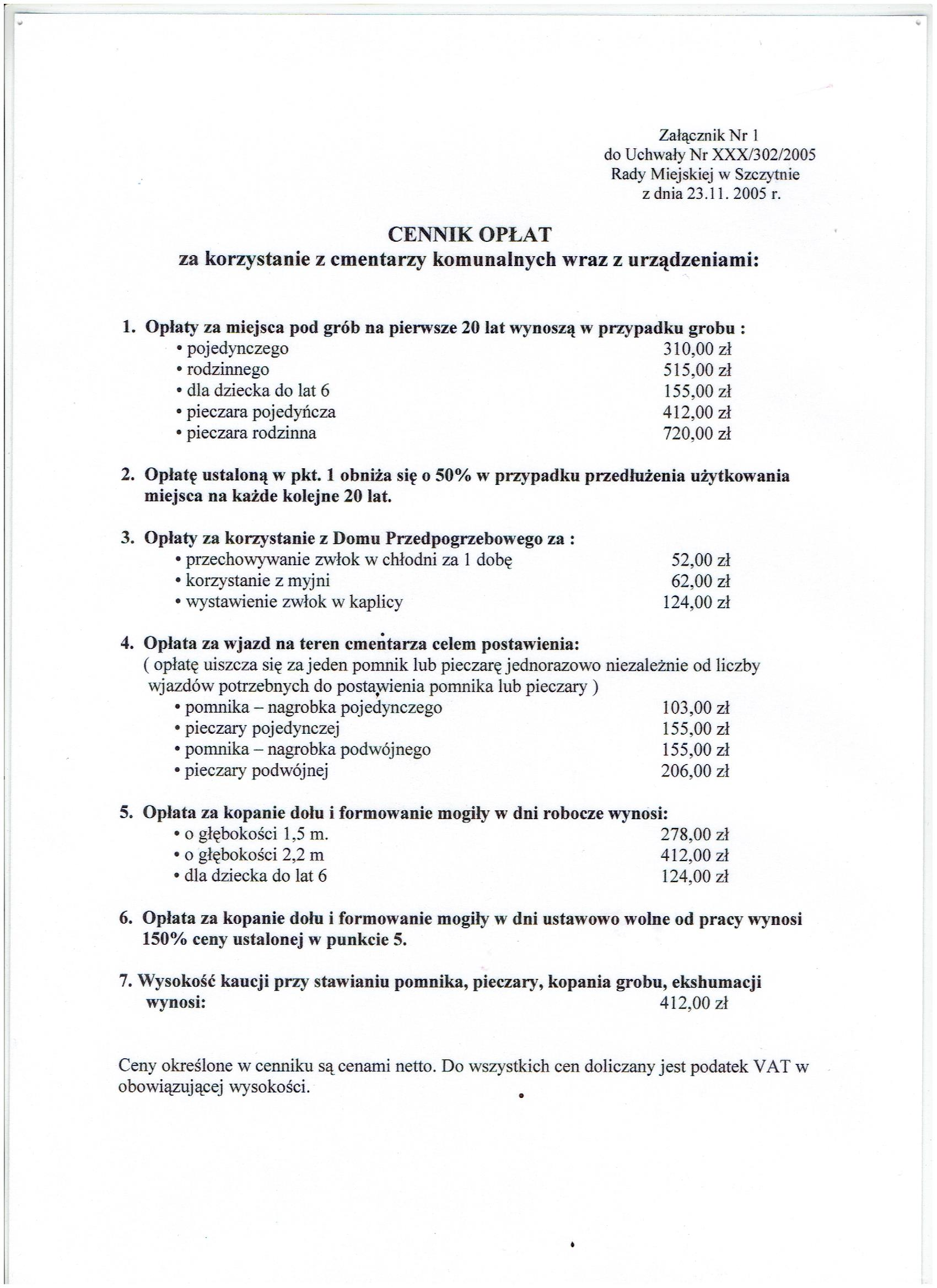

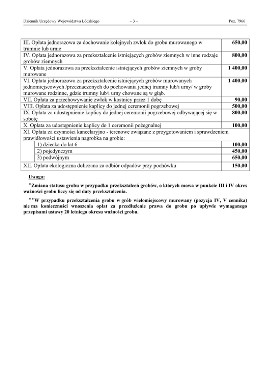

New price list and regulations for the cemetery on ul. Dąbrowska in Tomaszów. How much are prices rising? What is prohibited? | Tomaszów Mazowiecki Nasze Miasto

Chapter I GENERAL PROVISIONS § 1 1. Organizational Regulations of Samodzielny Publiczny Szpital Kliniczny Nr 1 in Lublin
