Remington HC6550 vacuum hair clipper - NEW! - Hair and beard trimmers - prices, deals, buy cheap - Vatera.hu

VGR - Cordless hair clipper set with digital display and accessories (44 pcs) - SzépségEgészség.hu

Rowenta TN9300 hair clipper with vacuum technology, titanium blade, precision adjustment down to 1 mm, 17 different settings - eMAG.hu

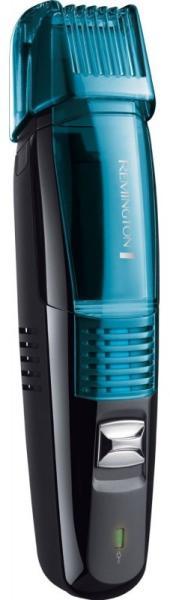

REMINGTON HC6550 cordless vacuum hair clipper set - iPon - hardware and software news, reviews, webshop, forum

Remington HC6550 Vacuum hair clipper - titanium blades, lithium battery, 1.5-25 mm, 9 attachments, mains and battery operation, washable blades

Philips Beardtrimmer Series 7000 BT7204/85 vacuum beard trimmer, 0.5 mm precision settings, 20 lockable length settings, White/Black - eMAG.hu