Businesswoman in Suit and Tie, Confident Female Entrepreneur, Office Worker, Successful Manager, Portrait of an Attractive Woman in an Elegant Fitted Suit Isolated on White Background | Premium Photo

Fashion Portrait of a Woman in a Black Suit, Bow Tie and Hat at a Luxury Villa | Free Photo

The best suit: well-cut trousers, a tie and a white shirt. Leighton's look is missing nothing, and she sets a new trend with it - Puretrend

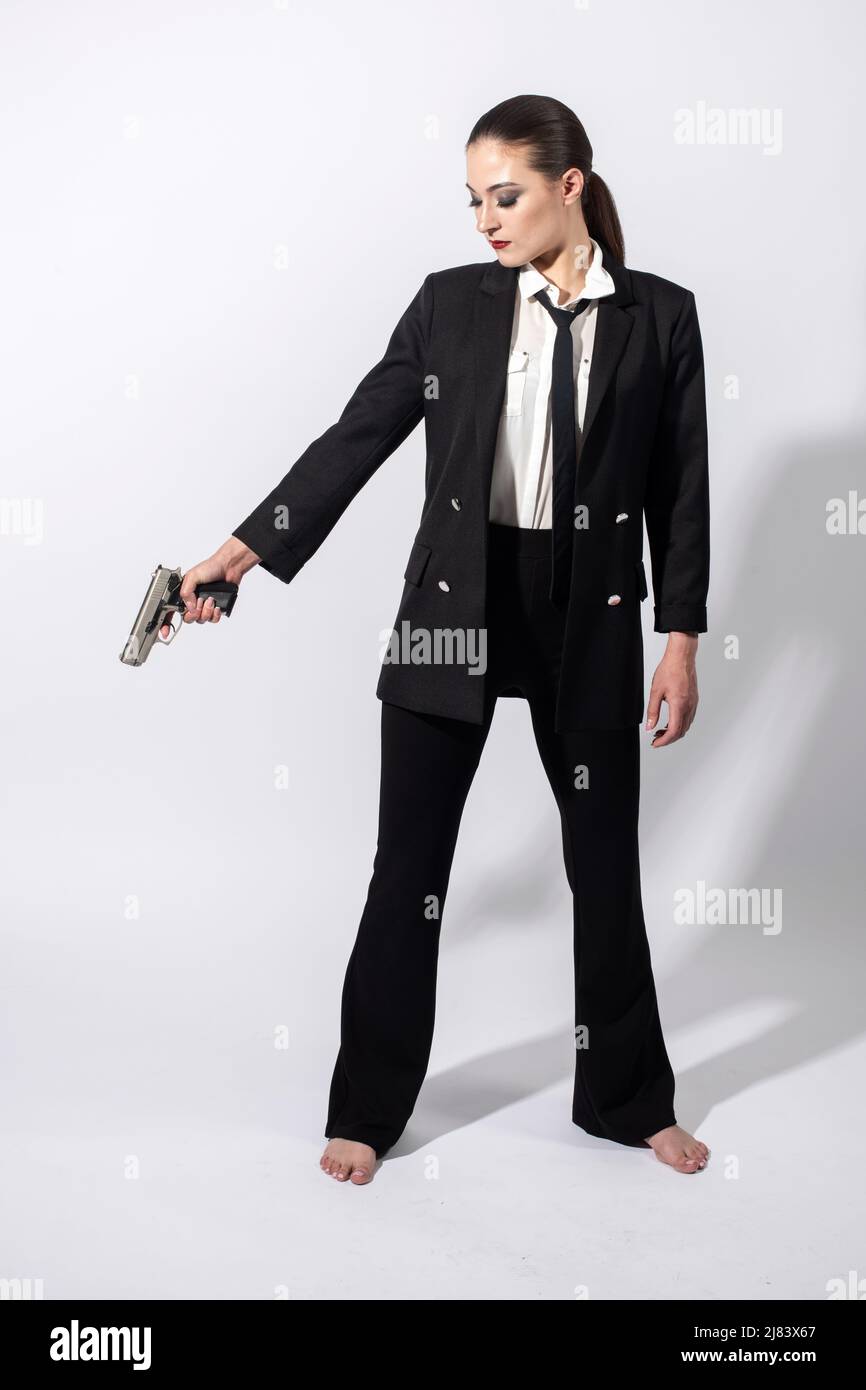

38,400+ Woman Wearing a Tie Photos, Pictures and Royalty-Free Images - iStock | Single woman wearing a tie, Rust woman, Woman in a men's suit